接續昨天,batch_size 可以控制一次產圖的數量,這在 ComfyUI 中也可以在 Empty Latent Image 或 StableCascade_EmptyLatentImage 中調,可以找一下試試。

extras.sampling_configs 中的 shift 可能是跟處理雜訊有關,待確認。timesteps 就是 steps 。

t_start 跟 timesteps 有關控制的可能是跟 denoise 有關,一直使用方便的 API 跟簡單的工具,反而不知道直接用用 torch 怎麼給參數了,日後若有研究再補上。

batch_size = 2

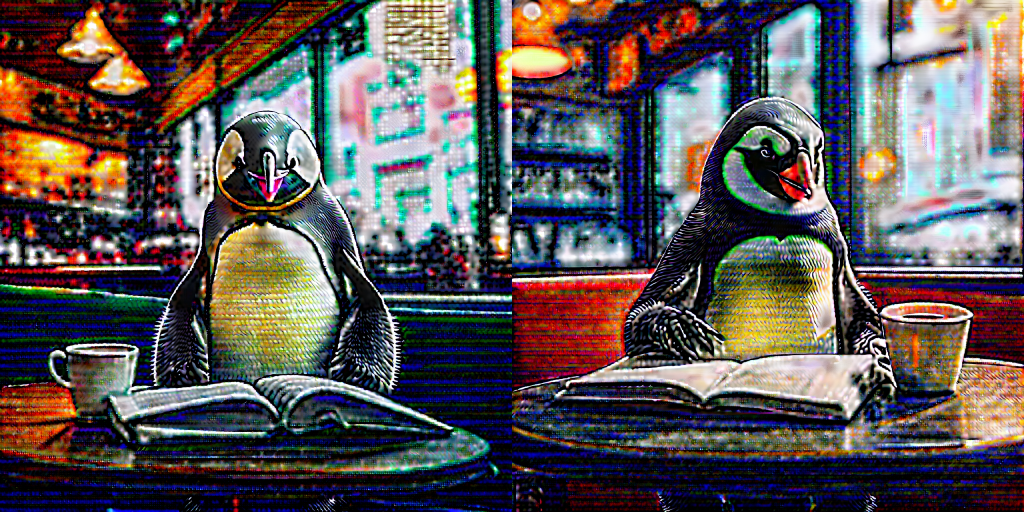

caption = "Cinematic photo of an anthropomorphic penguin sitting in a cafe reading a book and having a coffee"

height, width = 1024, 1024

stage_c_latent_shape, stage_b_latent_shape = calculate_latent_sizes(height, width, batch_size=batch_size)

# Stage C Parameters

extras.sampling_configs['cfg'] = 4

extras.sampling_configs['shift'] = 2

extras.sampling_configs['timesteps'] = 20

extras.sampling_configs['t_start'] = 1.0

# Stage B Parameters

extras_b.sampling_configs['cfg'] = 1.1

extras_b.sampling_configs['shift'] = 1

extras_b.sampling_configs['timesteps'] = 10

extras_b.sampling_configs['t_start'] = 1.0

# PREPARE CONDITIONS

batch = {'captions': [caption] * batch_size}

conditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=False, eval_image_embeds=False)

unconditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=True, eval_image_embeds=False)

conditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=False)

unconditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=True)

with torch.no_grad(), torch.cuda.amp.autocast(dtype=torch.float32):

# torch.manual_seed(42)

sampling_c = extras.gdf.sample(

models.generator, conditions, stage_c_latent_shape,

unconditions, device=device, **extras.sampling_configs,

)

for (sampled_c, _, _) in tqdm(sampling_c, total=extras.sampling_configs['timesteps']):

sampled_c = sampled_c

# preview_c = models.previewer(sampled_c).float()

# show_images(preview_c)

conditions_b['effnet'] = sampled_c

unconditions_b['effnet'] = torch.zeros_like(sampled_c)

sampling_b = extras_b.gdf.sample(

models_b.generator, conditions_b, stage_b_latent_shape,

unconditions_b, device=device, **extras_b.sampling_configs

)

for (sampled_b, _, _) in tqdm(sampling_b, total=extras_b.sampling_configs['timesteps']):

sampled_b = sampled_b

sampled = models_b.stage_a.decode(sampled_b).float()

show_images(sampled)

偶然發現一件事,把 cfg 調成 8 後影像變這樣,之前用 comfyUI 會有奇怪的紋理應該就是這造成的。

調回原本範例給的數值結果會是這樣,好看多了,所以得給 Stable Cascade 更多自主性? 或是拉高 cfg 時得將 Prompt 寫的更加詳細?

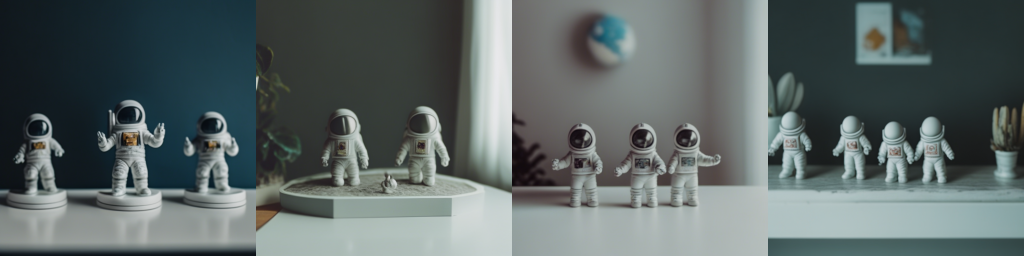

他還有一個變換功能,可以給一張圖,他會基於這張影像略為變換。

範例給的連結失效了,我改拿 huggingface 的測試用圖。如果也失效的話就把下面那張三個太空人的圖下載下來用吧。

batch_size = 4

url = "https://cdn-lfs.huggingface.co/datasets/huggingface/documentation-images/42f0ee242d8caaee1aea5506c8318c6a920d559a63c6db8d79f993eebaf7d790?response-content-disposition=inline%3B+filename*%3DUTF-8%27%27img2img-init.png%3B+filename%3D%22img2img-init.png%22%3B&response-content-type=image%2Fpng&Expires=1725463863&Policy=eyJTdGF0ZW1lbnQiOlt7IkNvbmRpdGlvbiI6eyJEYXRlTGVzc1RoYW4iOnsiQVdTOkVwb2NoVGltZSI6MTcyNTQ2Mzg2M319LCJSZXNvdXJjZSI6Imh0dHBzOi8vY2RuLWxmcy5odWdnaW5nZmFjZS5jby9kYXRhc2V0cy9odWdnaW5nZmFjZS9kb2N1bWVudGF0aW9uLWltYWdlcy80MmYwZWUyNDJkOGNhYWVlMWFlYTU1MDZjODMxOGM2YTkyMGQ1NTlhNjNjNmRiOGQ3OWY5OTNlZWJhZjdkNzkwP3Jlc3BvbnNlLWNvbnRlbnQtZGlzcG9zaXRpb249KiZyZXNwb25zZS1jb250ZW50LXR5cGU9KiJ9XX0_&Signature=Ft9aKO6umwkVeAdsSCdlYp7o9IFoAw%7EsBHxAkoOOQxJP89dhP8HRo3WNBU64Gj9UR65AwEL9FUfIWZ8Frr3DdyMqrFqUOIZjknuAxx7YJjnW4KaPtFkjaeeFFEiX4xVoI8WrtJwjTCnexu2acxgcQI469pBPgaasTNxpPKbVNOtmUHQm4%7EaLriuhoHecIkbWhNSCKL56B4gJFNCwgdNn4%7EU4NJZyNN3-GkHzCRnnL3pglp2mGRCtzdqXWcMsy5Ajo9tRCf5xrRdb4CwFEaZQ%7EhOboJPCasgS5pNfKtMR1i37xUCASPddsu2cA-AOjld9Pm8cdmvhZhEu%7EvwQyNAgNA__&Key-Pair-Id=K3ESJI6DHPFC7"

images = resize_image(download_image(url)).unsqueeze(0).expand(batch_size, -1, -1, -1).to(device)

batch = {'images': images}

show_images(batch['images'])

接下來測試看看吧。

caption = ""

height, width = 1024, 1024

stage_c_latent_shape, stage_b_latent_shape = calculate_latent_sizes(height, width, batch_size=batch_size)

# Stage C Parameters

extras.sampling_configs['cfg'] = 4

extras.sampling_configs['shift'] = 2

extras.sampling_configs['timesteps'] = 20

extras.sampling_configs['t_start'] = 1.0

# Stage B Parameters

extras_b.sampling_configs['cfg'] = 1.1

extras_b.sampling_configs['shift'] = 1

extras_b.sampling_configs['timesteps'] = 10

extras_b.sampling_configs['t_start'] = 1.0

# PREPARE CONDITIONS

batch['captions'] = [caption] * batch_size

with torch.no_grad(), torch.cuda.amp.autocast(dtype=torch.bfloat16):

# torch.manual_seed(42)

conditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=False, eval_image_embeds=True)

unconditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=True, eval_image_embeds=False)

conditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=False)

unconditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=True)

sampling_c = extras.gdf.sample(

models.generator, conditions, stage_c_latent_shape,

unconditions, device=device, **extras.sampling_configs,

)

for (sampled_c, _, _) in tqdm(sampling_c, total=extras.sampling_configs['timesteps']):

sampled_c = sampled_c

preview_c = models.previewer(sampled_c).float()

show_images(preview_c)

conditions_b['effnet'] = sampled_c

unconditions_b['effnet'] = torch.zeros_like(sampled_c)

sampling_b = extras_b.gdf.sample(

models_b.generator, conditions_b, stage_b_latent_shape,

unconditions_b, device=device, **extras_b.sampling_configs

)

for (sampled_b, _, _) in tqdm(sampling_b, total=extras_b.sampling_configs['timesteps']):

sampled_b = sampled_b

sampled = models_b.stage_a.decode(sampled_b).float()

show_images(sampled)

成品長這樣:

最後是圖生圖,我一樣用太空人的影像。

batch_size = 2

url = "https://cdn-lfs.huggingface.co/datasets/huggingface/documentation-images/42f0ee242d8caaee1aea5506c8318c6a920d559a63c6db8d79f993eebaf7d790?response-content-disposition=inline%3B+filename*%3DUTF-8%27%27img2img-init.png%3B+filename%3D%22img2img-init.png%22%3B&response-content-type=image%2Fpng&Expires=1725463863&Policy=eyJTdGF0ZW1lbnQiOlt7IkNvbmRpdGlvbiI6eyJEYXRlTGVzc1RoYW4iOnsiQVdTOkVwb2NoVGltZSI6MTcyNTQ2Mzg2M319LCJSZXNvdXJjZSI6Imh0dHBzOi8vY2RuLWxmcy5odWdnaW5nZmFjZS5jby9kYXRhc2V0cy9odWdnaW5nZmFjZS9kb2N1bWVudGF0aW9uLWltYWdlcy80MmYwZWUyNDJkOGNhYWVlMWFlYTU1MDZjODMxOGM2YTkyMGQ1NTlhNjNjNmRiOGQ3OWY5OTNlZWJhZjdkNzkwP3Jlc3BvbnNlLWNvbnRlbnQtZGlzcG9zaXRpb249KiZyZXNwb25zZS1jb250ZW50LXR5cGU9KiJ9XX0_&Signature=Ft9aKO6umwkVeAdsSCdlYp7o9IFoAw%7EsBHxAkoOOQxJP89dhP8HRo3WNBU64Gj9UR65AwEL9FUfIWZ8Frr3DdyMqrFqUOIZjknuAxx7YJjnW4KaPtFkjaeeFFEiX4xVoI8WrtJwjTCnexu2acxgcQI469pBPgaasTNxpPKbVNOtmUHQm4%7EaLriuhoHecIkbWhNSCKL56B4gJFNCwgdNn4%7EU4NJZyNN3-GkHzCRnnL3pglp2mGRCtzdqXWcMsy5Ajo9tRCf5xrRdb4CwFEaZQ%7EhOboJPCasgS5pNfKtMR1i37xUCASPddsu2cA-AOjld9Pm8cdmvhZhEu%7EvwQyNAgNA__&Key-Pair-Id=K3ESJI6DHPFC7"

images = resize_image(download_image(url)).unsqueeze(0).expand(batch_size, -1, -1, -1).to(device)

batch = {'images': images}

show_images(batch['images'])

稍微改掉原本範例的 Prompt,畢竟原本的連結也失效了,我換一個比較符合測試圖的。

caption = "three cowboy"

noise_level = 0.8

height, width = 1024, 1024

stage_c_latent_shape, stage_b_latent_shape = calculate_latent_sizes(height, width, batch_size=batch_size)

effnet_latents = core.encode_latents(batch, models, extras)

t = torch.ones(effnet_latents.size(0), device=device) * noise_level

noised = extras.gdf.diffuse(effnet_latents, t=t)[0]

# Stage C Parameters

extras.sampling_configs['cfg'] = 4

extras.sampling_configs['shift'] = 2

extras.sampling_configs['timesteps'] = int(20 * noise_level)

extras.sampling_configs['t_start'] = noise_level

extras.sampling_configs['x_init'] = noised

# Stage B Parameters

extras_b.sampling_configs['cfg'] = 1.1

extras_b.sampling_configs['shift'] = 1

extras_b.sampling_configs['timesteps'] = 10

extras_b.sampling_configs['t_start'] = 1.0

# PREPARE CONDITIONS

batch['captions'] = [caption] * batch_size

with torch.no_grad(), torch.cuda.amp.autocast(dtype=torch.bfloat16):

# torch.manual_seed(42)

conditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=False, eval_image_embeds=False)

unconditions = core.get_conditions(batch, models, extras, is_eval=True, is_unconditional=True, eval_image_embeds=False)

conditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=False)

unconditions_b = core_b.get_conditions(batch, models_b, extras_b, is_eval=True, is_unconditional=True)

sampling_c = extras.gdf.sample(

models.generator, conditions, stage_c_latent_shape,

unconditions, device=device, **extras.sampling_configs,

)

for (sampled_c, _, _) in tqdm(sampling_c, total=extras.sampling_configs['timesteps']):

sampled_c = sampled_c

preview_c = models.previewer(sampled_c).float()

show_images(preview_c)

conditions_b['effnet'] = sampled_c

unconditions_b['effnet'] = torch.zeros_like(sampled_c)

sampling_b = extras_b.gdf.sample(

models_b.generator, conditions_b, stage_b_latent_shape,

unconditions_b, device=device, **extras_b.sampling_configs

)

for (sampled_b, _, _) in tqdm(sampling_b, total=extras_b.sampling_configs['timesteps']):

sampled_b = sampled_b

sampled = models_b.stage_a.decode(sampled_b).float()

show_images(batch['images'])

show_images(sampled)

成品:

為了省資源,我用的是 small-small 版本,大模型的精細度應該會更好。